Optimizers


class macaw.optimizers.GradientDescent(fun, gradient, gamma=0.001)

Implements a simple gradient descent algorithm to find a local minimum of a function.

Attributes

- fun (callable): The function to be minimized.
- gradient (callable): The gradient of fun.
- gamma (float): Learning rate.

Methods

- compute(x0[, fun_args, n, xtol, ftol, gtol])
- save_state(x0, funval, n_iter, status)
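The class presumably iterates the classic fixed-step rule `x_{k+1} = x_k - gamma * gradient(x_k)`. The sketch below illustrates that rule in plain Python; it is not macaw's source. The function name `gradient_descent`, its return value `(x, f(x), iterations)`, and the reading of `n` as a maximum iteration count and of `xtol`, `ftol`, `gtol` as tolerances on the step size, the change in function value, and the gradient magnitude are all assumptions made for illustration. `fun_args` is omitted.

```python
from typing import Callable, List, Sequence, Tuple


def gradient_descent(
    fun: Callable[[List[float]], float],
    gradient: Callable[[List[float]], List[float]],
    x0: Sequence[float],
    gamma: float = 0.001,
    n: int = 1000,
    xtol: float = 1e-6,
    ftol: float = 1e-6,
    gtol: float = 1e-6,
) -> Tuple[List[float], float, int]:
    """Illustrative fixed-step gradient descent (hypothetical, not macaw's code)."""
    x = list(x0)
    fx = fun(x)
    for k in range(1, n + 1):
        g = gradient(x)
        # Assumed gtol semantics: stop at an (almost) stationary point.
        if max(abs(gi) for gi in g) < gtol:
            return x, fx, k - 1
        # Move every coordinate against the gradient by the learning rate gamma.
        x_new = [xi - gamma * gi for xi, gi in zip(x, g)]
        f_new = fun(x_new)
        step = max(abs(a - b) for a, b in zip(x_new, x))
        x, f_old, fx = x_new, fx, f_new
        # Assumed xtol/ftol semantics: stop when x or f barely changes.
        if step < xtol or abs(f_old - fx) < ftol:
            return x, fx, k
    return x, fx, n


# Usage: minimize f(x) = (x0 - 1)^2 + (x1 + 2)^2, whose minimum is at (1, -2).
def f(v: List[float]) -> float:
    return (v[0] - 1) ** 2 + (v[1] + 2) ** 2


def grad_f(v: List[float]) -> List[float]:
    return [2 * (v[0] - 1), 2 * (v[1] + 2)]


x_min, f_min, n_iter = gradient_descent(f, grad_f, [0.0, 0.0], gamma=0.1, ftol=1e-12)
```

With `gamma=0.1` each step shrinks the distance to the minimizer by a factor of 0.8 here, so the run stops on the step-size test long before the iteration limit. A fixed step keeps the loop simple, but too large a `gamma` makes the iterates diverge and too small a `gamma` makes convergence slow.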


class macaw.optimizers.CoordinateDescent(fun, gradient, gamma=0.001)

Implements a sequential coordinate descent algorithm to find a local minimum of a function.

Attributes

- fun (callable): The function to be minimized.
- gradient (callable): The gradient of fun.
- gamma (float): Learning rate.

Methods

- compute(x0[, fun_args, n, xtol, ftol, gtol])
- save_state(x0, funval, n_iter, status)
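Sequential coordinate descent differs from plain gradient descent in how the step is applied: a sweep updates one coordinate at a time, and each update uses the gradient evaluated at the partially updated point. The sketch below assumes that is what "sequential" means for this class; the name `coordinate_descent`, its return value, and the stopping rules (`xtol` on the largest change during a sweep, `ftol` on the change in f) are hypothetical and illustrative only, not macaw's implementation.

```python
from typing import Callable, List, Sequence, Tuple


def coordinate_descent(
    fun: Callable[[List[float]], float],
    gradient: Callable[[List[float]], List[float]],
    x0: Sequence[float],
    gamma: float = 0.001,
    n: int = 1000,
    xtol: float = 1e-6,
    ftol: float = 1e-6,
) -> Tuple[List[float], float, int]:
    """Illustrative cyclic coordinate descent (hypothetical, not macaw's code)."""
    x = list(x0)
    fx = fun(x)
    for k in range(1, n + 1):
        x_prev = list(x)
        # One sweep: update the coordinates one at a time, in order.
        for i in range(len(x)):
            # The gradient is re-evaluated at the current point, so coordinate i
            # already sees the new values of coordinates 0..i-1.
            x[i] -= gamma * gradient(x)[i]
        f_new = fun(x)
        step = max(abs(a - b) for a, b in zip(x, x_prev))
        f_old, fx = fx, f_new
        # Stop when a full sweep barely moves x or barely changes f.
        if step < xtol or abs(f_old - fx) < ftol:
            return x, fx, k
    return x, fx, n


# Usage: a coupled quadratic whose minimum is at (8/3, -10/3).
def f(v: List[float]) -> float:
    return (v[0] - 1) ** 2 + (v[1] + 2) ** 2 + v[0] * v[1]


def grad_f(v: List[float]) -> List[float]:
    return [2 * (v[0] - 1) + v[1], 2 * (v[1] + 2) + v[0]]


x_min, f_min, n_sweeps = coordinate_descent(f, grad_f, [0.0, 0.0], gamma=0.1, ftol=1e-12)
```

The `x0 * x1` term couples the two coordinates, so each update shifts the best value of the other one; the sweeps still converge because `gamma=0.1` is below `1/L_i = 1/2`, where `L_i = 2` is the curvature of f along each coordinate. Recomputing the full gradient for a single partial derivative is wasteful and is done here only to keep the sketch short.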


class macaw.optimizers.MajorizationMinimization(fun, optimizer='gd', **kwargs)

Implements a Majorization-Minimization scheme.

Attributes

- fun (object): Objective function to be minimized. It must be an object that provides the following methods:
  - evaluate: returns the value of the objective function.
  - surrogate_fun: returns the value of an appropriate surrogate function.
  - gradient_surrogate: returns the value of the gradient of the surrogate function.
- optimizer (str): Specifies the optimizer to use during the minimization step. Options are:
  - 'gd': Gradient Descent
  - 'cd': Coordinate Descent
- kwargs (dict): Keyword arguments to be passed to the optimizer.

Methods

- compute(x0[, n, xtol, ftol])
- save_state(x0, funval, n_iter, status)
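The MM principle: at the current iterate `x_k`, the surrogate `g(x | x_k)` must satisfy `g(x | x_k) >= f(x)` for all `x` and `g(x_k | x_k) = f(x_k)`. Any `x_{k+1}` that does not increase the surrogate then gives `f(x_{k+1}) <= g(x_{k+1} | x_k) <= g(x_k | x_k) = f(x_k)`, so f never increases. The sketch below illustrates this with an object exposing the three documented methods. The documentation lists only the method names, so the `(x, x_k)` argument order, the example objective `CauchyLocation`, and the function `majorization_minimization` are all hypothetical. The minimization step is plain gradient descent on the surrogate, loosely mirroring the `'gd'` option.

```python
import math
from typing import List, Tuple


class CauchyLocation:
    """Hypothetical objective f(x) = sum_i log(1 + (x - a_i)^2) for scalar x."""

    def __init__(self, data: List[float]):
        self.data = list(data)

    def evaluate(self, x: float) -> float:
        return sum(math.log1p((x - a) ** 2) for a in self.data)

    def surrogate_fun(self, x: float, x_k: float) -> float:
        # log is concave, so log(1 + u) <= log(1 + u_k) + (u - u_k) / (1 + u_k):
        # a quadratic upper bound on f that touches f at x = x_k.
        total = 0.0
        for a in self.data:
            u, u_k = (x - a) ** 2, (x_k - a) ** 2
            total += math.log1p(u_k) + (u - u_k) / (1.0 + u_k)
        return total

    def gradient_surrogate(self, x: float, x_k: float) -> float:
        # Derivative of surrogate_fun with respect to x (x_k held fixed).
        return sum(2.0 * (x - a) / (1.0 + (x_k - a) ** 2) for a in self.data)


def majorization_minimization(
    obj: CauchyLocation,
    x0: float,
    n: int = 100,
    ftol: float = 1e-10,
    gamma: float = 0.05,
    inner_steps: int = 50,
) -> Tuple[float, List[float]]:
    """Illustrative MM loop (hypothetical, not macaw's code)."""
    x = x0
    history = [obj.evaluate(x)]
    for _ in range(n):
        x_k = x  # Majorization step: freeze the expansion point.
        # Minimization step: gradient descent on the surrogate built at x_k.
        for _ in range(inner_steps):
            x -= gamma * obj.gradient_surrogate(x, x_k)
        history.append(obj.evaluate(x))
        # f is non-increasing, so stop once the decrease becomes negligible.
        if history[-2] - history[-1] < ftol:
            break
    return x, history


# Usage: a robust location estimate for a cluster near 1 plus one outlier.
objective = CauchyLocation([0.8, 1.0, 1.2, 9.0])
x_hat, f_history = majorization_minimization(objective, x0=0.0)
```

Starting from 0, the iterates settle at roughly 1.04; the outlier at 9.0 barely moves the estimate because its surrogate weight `1 / (1 + (x_k - a)^2)` is tiny. The loop only needs `gradient_surrogate`; `surrogate_fun` is included so the object exposes all three documented methods.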

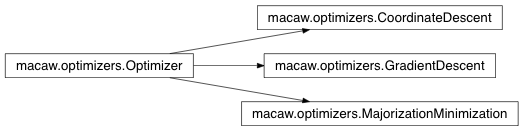

Inheritance Diagram¶